There is little doubt among nonprofits about the value of monitoring and evaluation (M&E) as a mechanism that helps organisations learn from their experience and stay accountable for results. That, however, doesn't translate into all nonprofits actually adopting M&E as a way of life.

While there are several reasons why many nonprofits don’t monitor or evaluate their work, chief among these is the difficulty in deciding whether to hire external expertise or use existing staff for M&E.

While hiring external expertise may seem like an expensive proposition, identifying the right individuals or teams internally to take on the responsibility is also a tough task.

However, framing the choice this way reflects a flawed understanding of M&E itself. People often fail to see that the term conflates multiple activities, which must be divided between internal and external entities based on each organisation's needs.

Decoding monitoring and evaluation

The first important distinction that needs to be made is between monitoring and evaluation.

Monitoring is “a continuing function that uses […] collection of data on specified indicators to provide management and stakeholders of an ongoing intervention with indications of the extent of progress.” It is usually an internal management activity conducted by the nonprofit.

Ideally, a monitoring system should track a small enough number of indicators that the programme manager and/or team can review them frequently and act on them without hiring additional staff or consultants.


Evaluation, on the other hand, is “an objective assessment of an ongoing or completed project, programme or policy, its design, implementation and results.”

Evaluation can be further sub-divided into four phases: planning, data collection, analysis and dissemination. Responsibility for these four phases can be assigned between the nonprofit and an external evaluator (an individual consultant or a firm) in multiple ways.

Choosing what’s best for you

Below I discuss three nonprofit scenarios and the option best recommended for each. These options are not exhaustive, but are intended to help nonprofits decide on the degree of internal and external involvement in their evaluation function.

Option 1: External evaluation

If your nonprofit has a small team that is already overstretched, and if you do not work with highly sensitive issues or populations, then an external evaluation is likely to be the best option for you.

However, if you do work with sensitive issues or populations, this is not the ideal option, because your own involvement in the evaluation will be fairly limited. For example, an evaluation of an HIV prevention campaign targeted at sex workers is much better conducted by data collectors with whom the respondents have already built rapport than by an external evaluator. Similarly, an evaluation of a programme that targets children may be better conducted by the nonprofit itself, for the same reason.

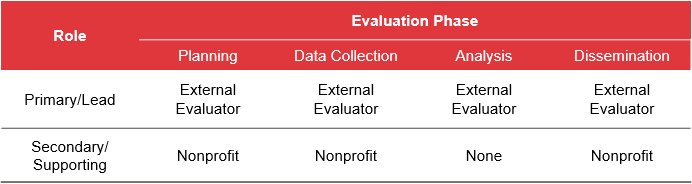

This table describes the division of roles between an external evaluator and the nonprofit in Option 1:


In Option 1, the nonprofit's role is limited to:

- Taking part in the planning phase, to decide the questions that will guide the evaluation

- Ensuring the participation of the target population(s) and other stakeholders in data collection

- Addressing any concerns regarding the data collection process

Nevertheless, the external evaluator plays the lead role in all four phases.

Tip 1: If you choose Option 1, make sure you select an evaluator who is receptive to including questions that you consider relevant in guiding the evaluation.

Option 2: External evaluation with internal data collection

This might be the best option for you if:

- your nonprofit has a sizeable number of employees, especially if they include field or call centre staff

- you work with sensitive issues or populations

- you have limited funds and only plan to conduct a one-time evaluation

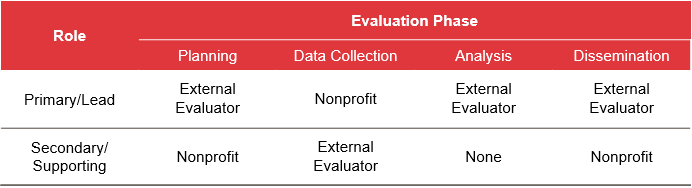

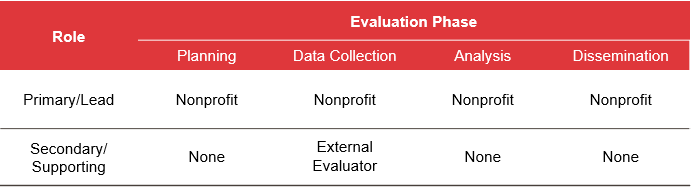

This table describes the division of roles between an external evaluator and the nonprofit in Option 2:

The only difference between Options 1 and 2 lies in the data collection phase. In Option 2, the nonprofit collects the data itself, typically through its field staff. However, the external evaluator should still support data collection, both to understand the context in which the data was collected (which will be necessary for analysis) and to ensure its quality.

Because data is collected by staff who have already established trust with respondents, Option 2 is preferable to Option 1 for programmes that work with issues or populations that are sensitive.

Even for issues or populations that are not sensitive, there is an advantage to having data collected by staff who are familiar with the programme: they are quicker to understand the data collection tools and the terms used by respondents, and they can gather more detailed feedback. Option 2 may also be the most cost-effective option for a one-time evaluation.

Nevertheless, Option 2 also has its disadvantages. In cases where the data collectors are the same individuals responsible for delivering the programme, both they and the respondents may hesitate to report negative results.

It could be difficult to eliminate this bias even with supervision and validation of data collection by the external evaluator. Also, staff could find it challenging to schedule data collection alongside their other responsibilities.

Tip 2: If you choose Option 2, make sure you have the buy-in of both the data collectors and their managers, and that they are fully aware of how much of their time will be required, and when.


Option 3: Internal evaluation

Internal evaluation is likely the best choice for you if your nonprofit plans to conduct evaluations regularly. Here, the nonprofit plays the lead role in all phases of the evaluation and has sole responsibility for planning, analysis and dissemination. The external evaluator plays an important supporting role in data collection.


In this option, the nonprofit asks the external evaluator to validate its data by collecting data from a separate sample, using the same criteria for selecting the sample population and the same data collection tools as the nonprofit.

The results from the two samples (one collected by the nonprofit, the other by the external evaluator) are then compared, and any discrepancies are identified. These discrepancies could arise from biases on the part of either the nonprofit or the evaluator, from vaguely worded questions, and so on.

It is important for the external evaluator and nonprofit to discuss the possible reasons for such discrepancies, and to revise the tools, retrain staff and repeat the data collection as necessary to make sure that they are addressed.
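To make the comparison step more concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not a prescribed method: it assumes each sample's results have been summarised as the proportion of respondents giving a particular answer to each question, and it flags questions where the nonprofit's and the evaluator's figures differ by more than a hypothetical tolerance of 10 percentage points. The function name, question IDs, figures and threshold are all invented for illustration; a real evaluation would choose its own comparison and might use a formal statistical test instead.

```python
from typing import Dict, List


def flag_discrepancies(
    nonprofit: Dict[str, float],
    evaluator: Dict[str, float],
    tolerance: float = 0.10,  # hypothetical threshold (10 percentage points)
) -> List[str]:
    """Return the questions whose results differ by more than `tolerance`.

    Both inputs map a (hypothetical) question ID to the proportion of
    respondents in that sample giving the answer of interest (0.0 to 1.0).
    Only questions present in both samples are compared.
    """
    flagged = []
    for question in sorted(nonprofit.keys() & evaluator.keys()):
        gap = abs(nonprofit[question] - evaluator[question])
        if gap > tolerance:
            flagged.append(question)
    return flagged


# Invented summary results from the two samples, for illustration only.
nonprofit_sample = {"q1_attended_session": 0.82, "q2_uses_service": 0.64, "q3_satisfied": 0.91}
evaluator_sample = {"q1_attended_session": 0.79, "q2_uses_service": 0.48, "q3_satisfied": 0.76}

for q in flag_discrepancies(nonprofit_sample, evaluator_sample):
    print(f"{q}: nonprofit {nonprofit_sample[q]:.0%} vs evaluator {evaluator_sample[q]:.0%}")
```

With these invented figures, the second and third questions are flagged. A flagged question is only a prompt for the discussion described above (possible bias, unclear wording, the need to retrain staff), not a verdict on which sample is correct.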

This table describes the division of roles between an external evaluator and the nonprofit in Option 3:

Internal evaluations should be conducted by a team that is separate from the programme team and reports directly to the head of the organisation. This is for two reasons:

1) It allays concerns about the objectivity of the exercise. Separating the two teams also means that the data collected through the evaluation can be cross-checked against the monitoring data collected by the programme team.

2) The skills required to conduct an evaluation are typically different from those required for programme management.

For nonprofits who choose Option 3, it is important to hire individuals with experience in social science research/evaluation methods, data analysis and writing, and to give them the dedicated time required to conduct an evaluation.

Because it requires the hiring of a separate team, Option 3 is a better choice for nonprofits who are planning to conduct evaluations regularly than for those who are approaching it as a one-time activity.

The advantage of this option is that it lets nonprofits make their evaluations responsive to issues that emerge during programme implementation. With Options 1 and 2, the nonprofit has to do considerable preparatory work (selecting and contracting an evaluator, and agreeing on a timeline) before an evaluation can begin. As a result, Options 1 and 2 tend to be less flexible and take longer to deploy.

Tip 3: Option 3 works best when the evaluation team is independent enough to ensure the credibility of the evaluation, but integrated enough with the programme team to make sure that the results are disseminated frequently and inform decision-making.

Most nonprofits would benefit from conducting periodic evaluations that respond to changes in their programmes or the local context, and from improving their operations and strategy as a result. However, Option 3 may not be immediately feasible for some, given the initial investment required to establish an internal evaluation department.

Even for nonprofits that choose Option 1 or 2, participation in the planning and dissemination phases is crucial to ensuring that evaluations respond to their needs and inform decision-making within the organisation.