The development sector has come to accept and understand the importance of effective monitoring and evaluation (M&E) systems, as shown by the investment in people, tools, and research that has mushroomed worldwide over the last two decades.

While data does get collected and referred to in several programmes, most programme and M&E employees continue to make decisions based on the performance of individual key indicators, each viewed in isolation.

The purpose of this piece is to demonstrate and explain why it is critical to see indicators in relation to each other in order to accurately ascertain the status and effectiveness of a programme.

A logical framework helps you understand the pathway from inputs to outcomes. It lays out each component of the intervention, each aimed at changing a different aspect of the outcomes, which together lead to a solution.

For example, for an intervention designed to improve learning outcomes in schools, one component could be teacher training, another could be using technology in classrooms, and yet another could be working with parents and other community members.

Once the individual intervention components are decided, identify the indicators that best measure the performance of each. While doing so, it is important that each indicator is discrete (that is, it measures a separate output) and that all relevant indicators are included (for example, the percentage of teachers trained, the percentage of parents or community members engaged, and so on).

After selecting indicators, ensure that each has a target or benchmark against which it will be measured. Keep in mind that all the targets you set must cover the same period of time. So, while targets for some activities may be set annually, you may need to convert them to monthly targets. Ensure that the rationale for this conversion is logical, both for the programme and from a logic model point of view. For indicators that measure cumulative performance, targets can be calculated on a pro rata basis.
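As a rough illustration of converting targets to a common period, the Python sketch below assumes an annual target spread evenly across 12 months. The function names and the even spread are hypothetical choices for illustration, not a prescribed method; a programme with seasonal activity might need uneven monthly targets instead.

```python
# A minimal sketch, assuming an annual target is spread evenly across the months.
# Function names and the even-spread assumption are illustrative only.

def monthly_target(annual_target: float, months_in_period: int = 12) -> float:
    """Convert an annual target into an equal monthly target."""
    return annual_target / months_in_period


def cumulative_target(annual_target: float, months_elapsed: int,
                      months_in_period: int = 12) -> float:
    """Pro rata target for the months that have elapsed so far in the period."""
    if not 0 <= months_elapsed <= months_in_period:
        raise ValueError("months_elapsed must lie within the target period")
    return annual_target * months_elapsed / months_in_period


# Hypothetical example: 120 parent sessions planned for the year.
print(monthly_target(120))        # 10.0
print(cumulative_target(120, 4))  # 40.0 sessions expected by the end of month 4
```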

It also helps to measure the cumulative performance of each component. The strength of cumulative indicators is that they show the overall performance of the programme, taking into account challenges that may have slowed implementation earlier but have since been overcome or are being worked around.

For example, if your education programme involves hosting sessions for parents, the indicator "percentage of planned parent sessions held" can only tell you the performance level for a particular month. By contrast, the cumulative number of parent sessions held against the cumulative target tells you the implementation status up to that month. This enables the team either to applaud itself for being on target, or to understand what will be required to meet its commitments.
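The difference between the monthly and the cumulative view can be sketched in a few lines of Python. The planned and held session counts below are made-up numbers, and the function names are hypothetical.

```python
# Hypothetical monthly data for one indicator: parent sessions planned vs held.
planned = [10, 10, 10, 10]
held = [6, 9, 14, 11]


def monthly_achievement(planned, held):
    """Percentage of planned sessions held in each month, viewed in isolation."""
    return [100 * h / p for p, h in zip(planned, held)]


def cumulative_achievement(planned, held):
    """Percentage of cumulative planned sessions held up to and including each month."""
    results = []
    total_planned = total_held = 0
    for p, h in zip(planned, held):
        total_planned += p
        total_held += h
        results.append(100 * total_held / total_planned)
    return results


print(monthly_achievement(planned, held))
# [60.0, 90.0, 140.0, 110.0]
print([round(x, 1) for x in cumulative_achievement(planned, held)])
# [60.0, 75.0, 96.7, 100.0]
```

Here the first month looks weak on its own, and months three and four look like over-achievement, but the cumulative figures show the team catching up and meeting its commitment by month four.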

In this way, cumulative performance gives us inputs not only on targets but also on efficiency. It can also help you set and adjust expectations with external stakeholders and ensure there is no undue pressure on your team.

Since each indicator measures the performance of a different output, the indicators may have different units of measurement. For instance, teachers trained may be expressed as a percentage, and tech-enabled classrooms as a number.

Therefore, to make the data comparable, each indicator needs to be scaled from 0 to 100 (or 0 to 100 percent), where 0 denotes the lowest performance and 100 indicates that the target has been achieved.

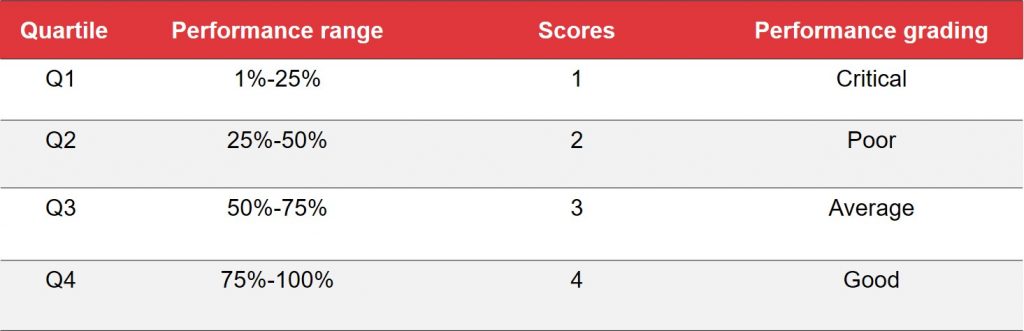
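A minimal sketch of this scaling in Python, assuming each indicator is expressed as achievement divided by target and that over-achievement is capped at 100 (the article does not say how values above target are handled). The function name and the example figures are hypothetical.

```python
def scale_to_100(achieved: float, target: float) -> float:
    """Express achievement against target on a 0-100 scale (100 = target met).

    Assumption: values above target are capped at 100 and negative values at 0.
    """
    if target <= 0:
        raise ValueError("target must be positive")
    return max(0.0, min(100.0, 100 * achieved / target))


# Percentage-based indicator: 72% of teachers trained against a target of 90%.
print(scale_to_100(72, 90))  # 80.0
# Count-based indicator: 45 tech-enabled classrooms against a target of 60.
print(scale_to_100(45, 60))  # 75.0
```

Because both indicators now sit on the same 0 to 100 scale, they can be compared and averaged even though one started as a percentage and the other as a count.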

This scale can then be split into four quartiles, with a score assigned to each quartile: the better the performance, the higher the score.
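The quartile step might look like the following, assuming quartile scores of 1 to 4 with band edges at 25, 50 and 75. The article does not state the exact scores or how boundary values are assigned, so treat both as placeholders.

```python
def quartile_score(scaled_value: float) -> int:
    """Map a 0-100 scaled indicator value to an assumed quartile score of 1-4.

    Assumed bands: below 25 -> 1, 25 to <50 -> 2, 50 to <75 -> 3, 75 to 100 -> 4.
    """
    if not 0 <= scaled_value <= 100:
        raise ValueError("scaled_value must be between 0 and 100")
    # Integer-divide into 25-point bands; cap at 4 so that exactly 100 stays in the top band.
    return min(4, int(scaled_value // 25) + 1)


print([quartile_score(v) for v in (10, 25, 60, 80, 100)])  # [1, 2, 3, 4, 4]
```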

Next, an index score can be calculated for each intervention component of each project. A component's index score is the average of the scores of all the indicators within that component.

For example, for a training component (part of an intervention to improve learning outcomes in schools), the indicators could be: percentage of teachers trained, percentage of community workers trained, and percentage of volunteers trained. The index score of this component would then be the average of the scores of these three indicators.

In calculating the average, equal weights can be assigned to each indicator and the answer rounded off to the nearest whole number.
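A sketch of the component index calculation, assuming the indicator scores are quartile scores as described above. The names and the example scores are hypothetical. Note that Python's built-in `round()` rounds halves to the nearest even number (`round(2.5)` gives `2`), so a small half-up helper is used here to match the everyday meaning of rounding off.

```python
import math


def round_half_up(x: float) -> int:
    """Round a non-negative number to the nearest whole number, rounding .5 up."""
    return math.floor(x + 0.5)


def component_index(indicator_scores: list[float]) -> int:
    """Equal-weight average of the indicator scores in one component, rounded off."""
    if not indicator_scores:
        raise ValueError("a component needs at least one indicator score")
    return round_half_up(sum(indicator_scores) / len(indicator_scores))


# Hypothetical quartile scores for a training component:
# teachers trained, community workers trained, volunteers trained.
training_scores = [4, 3, 3]
print(component_index(training_scores))  # 3
print(component_index([3, 2]))           # 3 (built-in round(2.5) would give 2)
```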

Once this average (arithmetic mean) has been calculated for each intervention component, you can assign weights to the components based on the time, resources, and importance of the activities within each, and calculate the composite index score of the project as the weighted average of the component scores.

If you consider each component equally important, simply calculate the average of all the component scores without assigning weights, and round it off to the nearest whole number.
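The composite index can be sketched as a weighted average in Python. The component names, scores, and weights below are hypothetical; the weights are treated as relative values, so they do not need to sum to 1, and leaving them out gives every component equal weight.

```python
import math
from typing import Dict, Optional


def composite_index(component_scores: Dict[str, float],
                    weights: Optional[Dict[str, float]] = None) -> int:
    """Weighted average of component index scores, rounded half up.

    With no weights supplied, every component counts equally (a simple average).
    """
    if weights is None:
        weights = {name: 1.0 for name in component_scores}
    total_weight = sum(weights[name] for name in component_scores)
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    weighted_sum = sum(component_scores[name] * weights[name] for name in component_scores)
    return math.floor(weighted_sum / total_weight + 0.5)


# Hypothetical component index scores for one project.
scores = {"teacher_training": 4, "classroom_technology": 1, "community_engagement": 2}
# Hypothetical relative weights: teacher training counts double.
weights = {"teacher_training": 2, "classroom_technology": 1, "community_engagement": 1}

print(composite_index(scores))           # 2  (7 / 3 = 2.33)
print(composite_index(scores, weights))  # 3  (11 / 4 = 2.75)
```

In this made-up example, giving teacher training double weight lifts the composite score from 2 to 3, which shows how much the choice of weights can matter.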

With the help of this composite index score, one can understand the overall performance of the project, as it combines all the outputs of the programme intervention and measures them against their respective targets. This helps the programme and management teams identify areas of low performance, review the reasons, and strengthen programme implementation.

It also makes it easy to compare the performance of different projects that have the same set of outputs and outcomes but are implemented in different geographical locations.

Although it can be useful to summarise the performance of your project in a single number, this may not always give an accurate picture: each project has its own start and end dates, so the implementation process may vary from one project to another.

Therefore, it is good to study the trends in these scores. One can also apply traffic-light colour coding to the scores; this makes it possible to see changes in performance over time and whether the action taken to resolve issues and challenges is effective.
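One way to combine trend-watching with colour coding is sketched below. The colour bands are hypothetical (red for scores of 1 or 2, amber for 3, green for 4, on an assumed 1 to 4 score scale), as are the monthly scores; a team would set its own thresholds.

```python
def traffic_light(score: int) -> str:
    """Hypothetical bands on an assumed 1-4 scale: 1-2 red, 3 amber, 4 green."""
    if score >= 4:
        return "green"
    if score == 3:
        return "amber"
    return "red"


def trend(scores: list[int]) -> list[tuple[int, str, str]]:
    """Pair each period's score with its colour and the change from the previous period."""
    rows = []
    for i, score in enumerate(scores):
        if i == 0:
            change = "n/a"
        elif score > scores[i - 1]:
            change = "up"
        elif score < scores[i - 1]:
            change = "down"
        else:
            change = "no change"
        rows.append((score, traffic_light(score), change))
    return rows


# Hypothetical monthly composite index scores for one project.
monthly_scores = [2, 2, 3, 4]
for month, (score, colour, change) in enumerate(trend(monthly_scores), start=1):
    print(f"Month {month}: score {score}, {colour}, {change}")
# Month 1: score 2, red, n/a
# Month 2: score 2, red, no change
# Month 3: score 3, amber, up
# Month 4: score 4, green, up
```

Read as a series, the colours show whether corrective action taken after the red months is starting to work, which a single month's score cannot show on its own.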

At Magic Bus, we have been following this system for the past year. However, we have realised that collecting data is only the first step in M&E. The focus should be on how we use this data in multiple ways so that it becomes relevant for programme teams and benefits the end-user.