The ‘evidence-informed’ paradigm in social and economic development has been transformative for millions of people around the globe. It has nudged governments, nonprofits, and philanthropists away from flawed assumptions about how to reduce poverty or spur economic growth. By rigorously testing different programme options—and then transparently reporting the results—it has helped funders and implementers alike to focus their resources on the most effective approaches to human development.

While the movement has had a large impact, it may be time for it to evolve. The current practice of evidence-informed programming often begins with piloting an innovation and then evaluating it at moderate scale. If the innovation works, it is scaled up to reach more people. This fairly linear path appears to work well for interventions dominated by a reliable biological or physical mechanism, such as the disruption of malaria infections via bed nets or the prevention of diarrhoeal disease through water chlorination.

Indeed, in the case of bed nets, the core intervention is a chemically treated physical barrier that prevents transmission of a parasite from its vector (the mosquito) to the human host. Some behaviour change is required to achieve positive health outcomes, but we now have ample evidence that low-income households routinely and effectively use bed nets to prevent malaria, particularly when the nets are provided at no cost. This suggests that scaling up the intervention is largely an operations and logistics challenge, perhaps supplemented by marketing or public education to sustain appropriate use.[1]

Now consider interventions that are not dominated by a biological or physical mechanism, for example, interventions that rely exclusively on shifting people’s beliefs or mental models. For these, we think there is a need for a more iterative approach, one that draws on recent advances in technology to enable rapid prototyping and continuous evaluation. The theory of change for such interventions is less deterministic and less clearly defined, in part because human cognition and behaviour are so complex. Variation in each person’s genetics, life experience, and environment can overwhelm the power of a single, generalisable intervention to achieve impact.

At The Agency Fund, we focus on innovative services that help people navigate their lives with self-determination, purpose, and dignity. These include new data and insights to inform people’s choices, as well as counselling to help them make sense of life opportunities and experiences. With these interventions, we can increase our chances of success by adopting a more adaptive, contextualised approach to innovation: one that rapidly iterates across different ideas and matches the most appropriate innovation to the person (or persona) being served. The tech sector has excelled at this iterative model of innovation, although tech companies tend to optimise for user engagement and monetisation rather than user well-being.

What do iterative models look like in practice?

One promising iterative model is a reinforcement learning method known as ‘multi-armed bandits’ (MAB). This is a strategy for uncovering the most effective programme option from a pool of many variations through continuous experimentation. Imagine that you have several versions of a nonprofit programme, all of which aim to increase household income. They are similar in nature, but you don’t know how effective each one is in the real world. Perhaps one is a loan product, another is the same loan linked with insurance, a third is the insurance alone, and the fourth and fifth add slight variations on the theme (such as financial education or goal setting). Now imagine that you also have a large population of people you would like to reach with the most effective programme.

With MAB, you want to balance the ‘exploration’ of different programme options (to learn about their effectiveness) with the ‘exploitation’ of the most effective option (to maximise the number of people receiving the greatest benefit). So rather than setting up a static randomised trial that tests a single option at a point in time (or a massive trial that tests all options in parallel), you dynamically assign people to different options over time to maximise your objective (in this case, increasing household income), while learning which option is most effective.
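To make the balance concrete, here is a minimal, hypothetical calculation. It assumes a binary outcome (for example, whether household income rose) and made-up success rates for three programme arms, and compares two ways of splitting 900 participants: uniformly, or tilted towards the arm that looks best. Expected welfare is the expected number of participants with a positive outcome; precision is the standard error of the estimated difference between the two leading arms. The rates, allocations, and function names are illustrative assumptions, not results from any study.

```python
import math

def expected_welfare(rates, counts):
    """Expected number of participants with a positive outcome."""
    return sum(p * n for p, n in zip(rates, counts))

def se_of_difference(rates, counts, i, j):
    """Standard error of the difference in success rates between arms i and j."""
    def variance(p, n):
        # Variance of a sample proportion with true rate p and n participants.
        return p * (1 - p) / n
    return math.sqrt(variance(rates[i], counts[i]) + variance(rates[j], counts[j]))

# Hypothetical true success rates for three programme arms.
rates = [0.30, 0.25, 0.20]
uniform = [300, 300, 300]  # pure exploration: equal shares
tilted = [700, 100, 100]   # mostly exploitation: favour the leading arm

for name, counts in [("uniform", uniform), ("tilted", tilted)]:
    print(name,
          round(expected_welfare(rates, counts)),
          round(se_of_difference(rates, counts, 0, 1), 4))
```

Under these assumptions the tilted split raises expected welfare (about 255 versus 225 positive outcomes) but widens the standard error for comparing the top two arms (about 0.047 versus 0.036). A bandit algorithm is one way of managing that tension as evidence accumulates.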

In practice, you start with a modest sample of programme participants, randomly assigned across all programme options. Then, as you observe their outcomes, you allocate a fresh tranche of participants. This time, however, you do not distribute people uniformly across the different variations (or ‘arms’); instead, you allocate a greater share of participants to the options that appear most effective at shifting outcomes so far. With each new round, you observe outcomes in every arm and allocate the next participants in ways that maximise benefits, while preserving enough statistical power in the remaining arms to monitor changes in outcomes and minimise estimation errors.
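The rounds described above can be sketched as a batched bandit. This is one possible design, not the method used by any organisation mentioned here: it assumes a binary outcome per participant, uses Thompson-style allocation (each arm’s share of the next tranche is its estimated probability of being the best arm under a Beta posterior), and reserves a hypothetical minimum share (`min_share`) for every arm so that less favoured arms keep generating data. The function names, true success rates, and batch sizes are illustrative assumptions.

```python
import random

def allocate_batch(successes, failures, batch_size, min_share=0.05, draws=2000, rng=None):
    """Split the next tranche of participants across arms (Thompson-style).

    Each arm's share is its estimated probability of being the best arm under
    a Beta(1 + successes, 1 + failures) posterior, blended with a floor of
    `min_share` per arm so that no arm stops receiving participants.
    """
    k = len(successes)
    if k * min_share > 1:
        raise ValueError("min_share is too large for the number of arms")
    rng = rng or random.Random()
    # Monte Carlo estimate of P(arm is best) from posterior draws.
    wins = [0] * k
    for _ in range(draws):
        samples = [rng.betavariate(1 + s, 1 + f) for s, f in zip(successes, failures)]
        wins[samples.index(max(samples))] += 1
    # Guarantee each arm at least min_share; the rest follows P(best).
    shares = [min_share + (1 - k * min_share) * w / draws for w in wins]
    # Round to whole participants, giving leftovers to the largest remainders.
    raw = [share * batch_size for share in shares]
    counts = [int(r) for r in raw]
    leftover = batch_size - sum(counts)
    order = sorted(range(k), key=lambda i: raw[i] - counts[i], reverse=True)
    for i in order[:leftover]:
        counts[i] += 1
    return counts

def run_trial(true_rates, batch_size, rounds, seed=0):
    """Simulate rounds of allocation: round 1 is uniform, later rounds adaptive."""
    rng = random.Random(seed)
    k = len(true_rates)
    successes, failures = [0] * k, [0] * k
    history = []
    for r in range(rounds):
        if r == 0:
            counts = [batch_size // k] * k  # uniform first round
        else:
            counts = allocate_batch(successes, failures, batch_size, rng=rng)
        for arm, n in enumerate(counts):
            for _ in range(n):
                # Simulated binary outcome, e.g. "household income rose".
                if rng.random() < true_rates[arm]:
                    successes[arm] += 1
                else:
                    failures[arm] += 1
        history.append(counts)
    return successes, failures, history

# Hypothetical success rates for five arms (loan, loan + insurance,
# insurance, loan + financial education, loan + goal setting).
successes, failures, history = run_trial([0.20, 0.30, 0.15, 0.22, 0.25], batch_size=500, rounds=6)
for counts in history:
    print(counts)
```

Each printed row shows how many participants each arm received in a round; with these made-up rates, later rounds will usually (though not always) shift towards the higher-rate arms. Raising `min_share` buys more learning about every arm at the cost of fewer participants in the leading one.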

This is hardly a new method: it is derived from clinical medicine, with some of the earliest mentions dating to the 1930s. What is novel is the computing power we now have access to, which makes it possible to implement complex algorithms with relative ease.

Another promising approach is to cluster participants into groups based on observable characteristics, and then allocate them to programme options that have been effective for people like them. This method, popularised in commerce through ‘recommender systems’, can address the heterogeneity of participant outcomes that we typically find in social sector interventions. Recommender systems have also become popular in recent years thanks to advances in digital data generation and computing power.
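A very simple version of the ‘people like them’ idea can be sketched without any machine learning library. This is an illustration under stated assumptions, not the approach used in any of the examples in this article: it groups participants by a hypothetical tuple of observable characteristics (a simple stand-in for clustering), recommends the arm with the highest average outcome within that group, and falls back to the best arm overall when a group has too few observations (`min_obs`, a hypothetical threshold). The data are made up.

```python
from collections import defaultdict

def build_recommender(records, min_obs=20):
    """Return a function mapping a participant group to a recommended arm.

    `records` is an iterable of (group_key, arm, outcome) tuples, where
    group_key is a tuple of observable characteristics (hypothetical schema).
    """
    by_group = defaultdict(lambda: defaultdict(list))
    overall = defaultdict(list)
    for group, arm, outcome in records:
        by_group[group][arm].append(outcome)
        overall[arm].append(outcome)

    def mean(values):
        return sum(values) / len(values)

    # Fallback for groups we know little about: best arm across everyone.
    best_overall = max(overall, key=lambda arm: mean(overall[arm]))

    def recommend(group):
        # Only trust arms with enough observations within this group.
        arms = {arm: vals for arm, vals in by_group.get(group, {}).items()
                if len(vals) >= min_obs}
        if not arms:
            return best_overall
        return max(arms, key=lambda arm: mean(arms[arm]))

    return recommend

# Illustrative, made-up outcome data (e.g. change in monthly income).
records = (
    [(("rural",), "loan", 1.0)] * 25 + [(("rural",), "insurance", 0.2)] * 25
    + [(("urban",), "loan", 0.1)] * 25 + [(("urban",), "insurance", 0.6)] * 25
    + [(("peri-urban",), "insurance", 0.9)] * 5
)
recommend = build_recommender(records)
print(recommend(("rural",)), recommend(("urban",)), recommend(("peri-urban",)))
# → loan insurance loan
```

The peri-urban group has only five observations, so the sketch falls back to the arm that performs best across all participants rather than trusting a small, noisy subgroup.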

Methods like these, which have been optimised by the tech sector, can be used to iteratively ‘search’ the space of potential solutions. They work best for programmes whose outcomes can be measured relatively quickly, and they have other limitations, of course.[2] But our hope is that nonprofit organisations will be able to continuously trial different options or features of their programmes, moving between ‘exploration’ (that is, testing new alternatives) and ‘exploitation’ (that is, investing more deeply in programmes that are generating positive impacts). Over time, the optimal strategy may change as the broader economy, environment, or population demographics shift. That is why it is important for organisations to iterate rapidly and evaluate continuously throughout their lifespans.
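One simple way to let the preferred option change over time is to base each arm’s estimate only on its most recent outcomes. The sketch below uses a sliding-window, epsilon-greedy rule, a technique chosen here for illustration rather than one the article prescribes; the class name, window length, and exploration rate (`window`, `epsilon`) are hypothetical.

```python
import random
from collections import deque

class SlidingWindowGreedy:
    """Epsilon-greedy choice using only each arm's last `window` outcomes."""

    def __init__(self, arms, window=200, epsilon=0.1, seed=None):
        # deque(maxlen=...) silently drops the oldest outcome when full.
        self.history = {arm: deque(maxlen=window) for arm in arms}
        self.epsilon = epsilon
        self.rng = random.Random(seed)

    def estimate(self, arm):
        outcomes = self.history[arm]
        # Untried arms get an infinite estimate so they are tried first.
        return sum(outcomes) / len(outcomes) if outcomes else float("inf")

    def choose(self):
        if self.rng.random() < self.epsilon:
            return self.rng.choice(list(self.history))  # explore
        return max(self.history, key=self.estimate)      # exploit

    def update(self, arm, outcome):
        self.history[arm].append(outcome)

# With a window of 3 (and no random exploration), old evidence fades quickly.
bandit = SlidingWindowGreedy(["A", "B"], window=3, epsilon=0.0)
for _ in range(3):
    bandit.update("A", 1)
    bandit.update("B", 0)
print(bandit.choose())  # → A
for _ in range(3):
    bandit.update("A", 0)
    bandit.update("B", 1)
print(bandit.choose())  # → B
```

A shorter window reacts faster to a changing environment but gives noisier estimates; a longer one is steadier but slower to notice that the best option has shifted.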

Has anyone done this sort of work in practice? We can cite a few examples: Athey et al. designed an algorithm to help women in Cameroon decide which contraceptive options to discuss with their medical providers. They essentially designed a recommender system to guide women towards the products that have proven effective for other women like them. We have seen MAB used to identify effective job search support for Syrian refugees in Jordan. And the nonprofit Youth Impact in Botswana conducts ‘rapid impact assessments’ by running randomised evaluations in the field each month to explore slight variations in their programming for youth sexual and reproductive health.

These are all early examples of iterative prototyping and learning, and we hope that as these methods gain traction, there will be many more case studies for us to learn from.

The role of technology and real-time field data in informing programmes

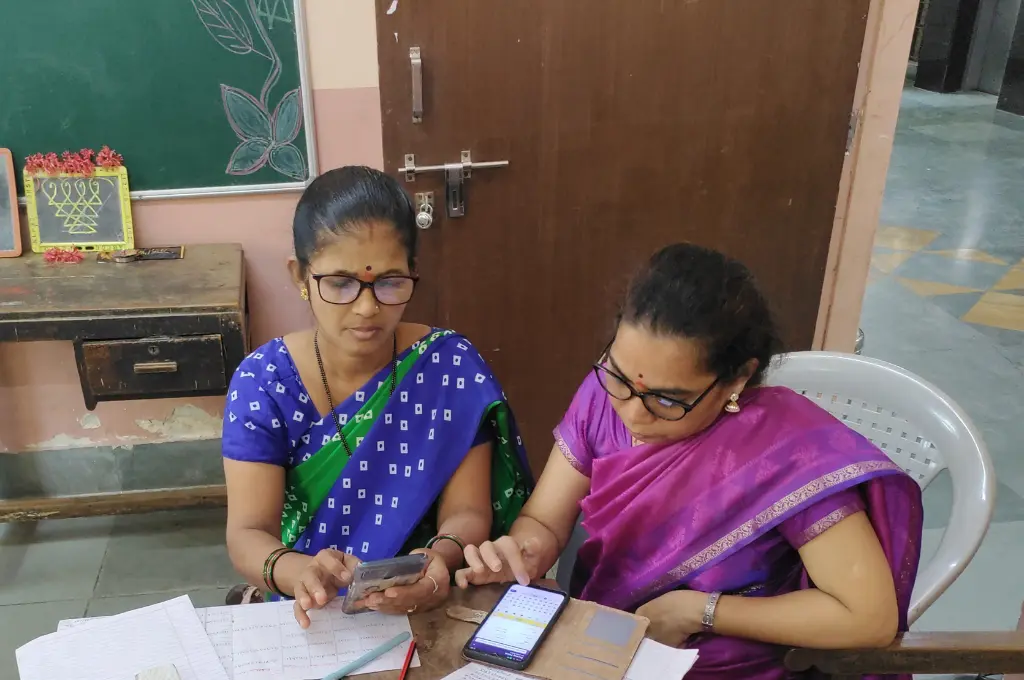

Iterative models require real-time data collection, analysis, and constant feedback loops. In order to tweak programming as you go along, you need excellent staff management and appropriate incentives. And yet, across geographies, we have seen that frontline workers are undercompensated for the work of data collection and data-driven operations. Too often, new technologies for data collection are introduced into their workflows without adequate training or support.

Despite this, we believe there are ways to reduce the frictions of data collection, and to increase the value of data generation for nonprofit workers and programme participants alike. In general, the options include:

- Hiring more people (that is, field-based staff) to take on, or at least distribute, the additional burden of collecting data in the field. If a programme needs more data to be collected, the parent organisation needs more staffing to capture the data. There is no free lunch when it comes to generating high-quality data.

- Reducing the amount of data collected by focusing only on the essential, high-value metrics that the organisation needs to manage its performance. Too often, organisations collect information that is not credible, actionable, responsible, or transportable (the CART principles).

- Reducing technical frictions in the collection of data, either by improving an enumerator’s user experience (through better user interface design) or by automating data capture where feasible (for example, by leveraging user interaction logs from an app).

- Paying enumerators better wages or aligning incentives so that frontline workers share in the benefits of strong data systems.

- Making better use of the data collected, by embedding the resulting information or insights within operations, in order to improve the organisation’s cost-effectiveness.
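As a small illustration of automating data capture, the sketch below derives two simple engagement metrics from app interaction logs, so that no one has to record them by hand. The event schema (user ID, ISO 8601 timestamp, event name), the `lesson_completed` event name, and the function name are hypothetical; a real app’s logs will look different.

```python
from collections import defaultdict
from datetime import datetime

def summarise_logs(events):
    """Per-user metrics from (user_id, iso_timestamp, event_name) tuples.

    Returns lessons completed and the number of distinct active days.
    """
    lessons = defaultdict(int)
    active_days = defaultdict(set)
    for user, ts, name in events:
        day = datetime.fromisoformat(ts).date()
        active_days[user].add(day)
        if name == "lesson_completed":
            lessons[user] += 1
    return {
        user: {"lessons_completed": lessons[user], "active_days": len(days)}
        for user, days in active_days.items()
    }

# Made-up log events for two users.
events = [
    ("u1", "2024-03-01T09:00:00", "app_open"),
    ("u1", "2024-03-01T09:05:00", "lesson_completed"),
    ("u1", "2024-03-02T18:00:00", "lesson_completed"),
    ("u2", "2024-03-01T10:00:00", "app_open"),
]
print(summarise_logs(events))
```

Metrics like these come for free once the app logs events, which is what makes automated capture attractive compared with asking enumerators to record the same information.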

All of this is hard work, but we’ve seen it in action. Ogow Health, an organisation in Somalia, has been creating electronic health records that can be accessed via WhatsApp. They designed their system to optimise the experience of programme participants and frontline workers. In India, Rocket Learning delivers bite-sized homeschooling lessons to parents and their children via WhatsApp, and then analyses photographs of students’ homework to evaluate performance (and customise future content to the child’s level). Rocket Learning’s model captures the data from programme activities, and then directly feeds the resulting analysis into ground operations at the most granular level (that is, at the level of the household).

How do we change the programming paradigm in the sector?

Funders play an important role in shifting paradigms in the social sector. They need to provide sustained (that is, multiyear) resources to nonprofits to first build up their data generation systems, and then support ongoing experimentation with different learning methods.

Nonprofits, in turn, need to prioritise learning as part of their core business. Initially, they may need to devote up to 15 percent of their annual budgets to programme research and development. For comparison, tech firms routinely spend 15 percent of revenue on these activities, and they are generally trying to nudge people to consume more. In development, we focus on outcomes such as improved health or nutrition, which can be even more challenging to shift. So, at least initially, budgets need to be large enough to accommodate serious R&D.

Tech companies can provide pro bono support to individual organisations that want to invest in becoming continuous learners. But to raise the entire field, we will need playbooks describing how to carry out this sort of work in the social sector. We will also need more open-source software tooling, built just for the social sector—something that The Agency Fund is working on with partners like Tech4dev in India.

—

Footnotes:

- [1] Even with a successful innovation, implementers often continue learning and monitoring as their programmes scale, to capture issues related to heterogeneous treatment effects. For example, different people may respond differently to behavioural nudges or information campaigns, which can create variation in outcomes even when the treatment is well defined overall. However, funding for an appropriate level of ongoing monitoring is not always readily available.

- [2] There is a trade-off between learning about different programme options and maximising welfare for programme participants. This is discussed in Kasy et al. In the context of MAB, you can select parameters that maximise learning (that is, maximise the power to detect differential effects across treatment arms) or parameters that maximise welfare (in which case you learn as you go, but generate less evidence on each of the programme arms).
